Loopy: Taming Audio-Driven Portrait Avatar with Long-Term Motion Dependency

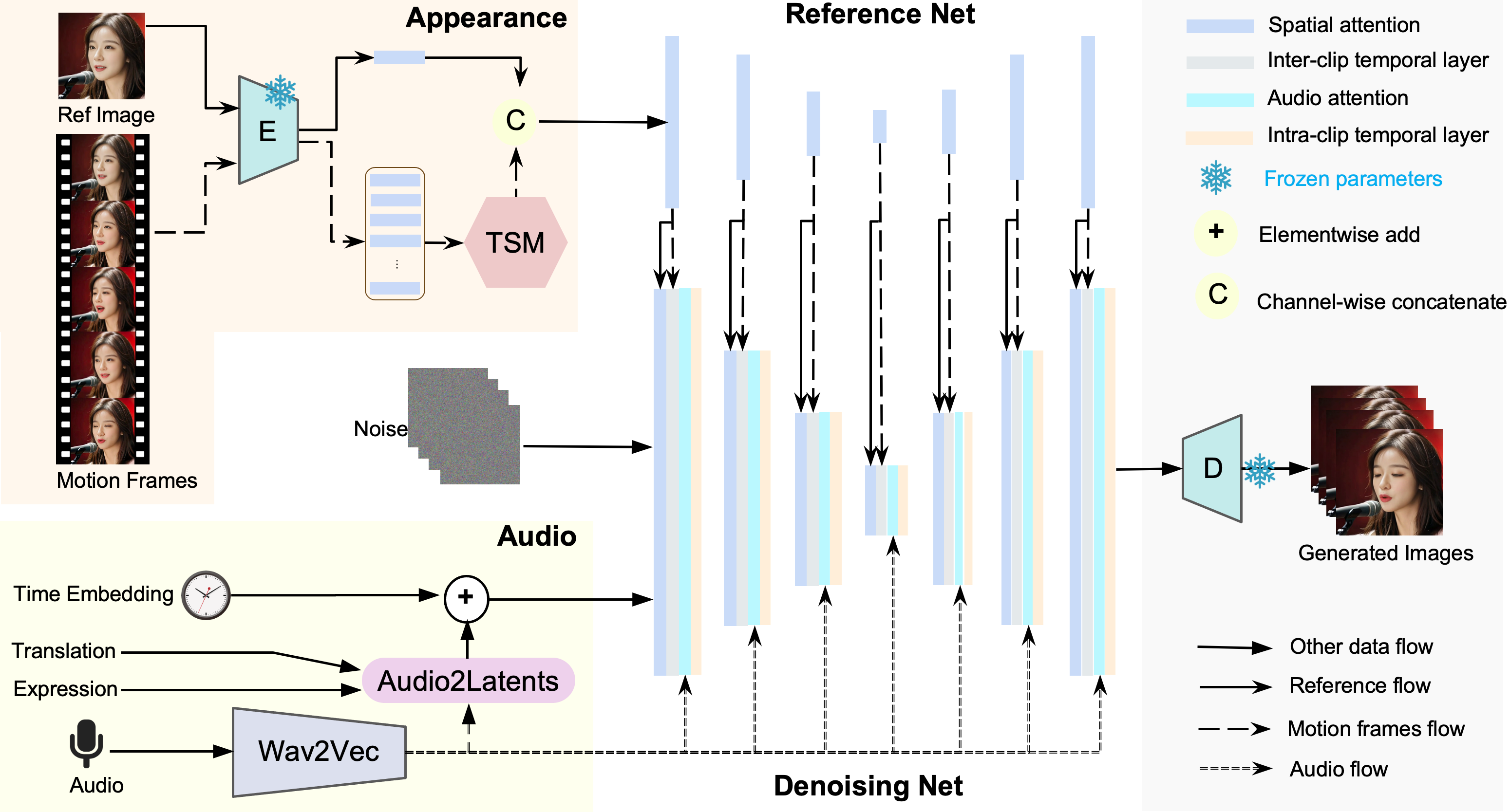

Jianwen Jiang1*†, Chao Liang1*, Jiaqi Yang1*, Gaojie Lin1, Tianyun Zhong2‡, Yanbo Zheng11Bytedance,2Zhejiang University *Equal contribution,†Project lead,‡Internship at Bytedance TL;DR: we propose an end-to-end audio-only conditioned video diffusion model named Loopy. Specifically, we designed an inter- and intra-clip temporal module and an audio-to-latents module, enabling the model to leverage long-term motion information from the data to learn natural motion patterns and improving audio-portrait movement correlation. This method removes the need for manually specified spatial motion templates used in existing methods to constrain motion during inference, delivering more lifelike and high-quality results across various scenarios.

Generated Videos

Loopy supports various visual and audio styles. It can generate vivid motion details from audio alone, such as non-speech movements like sighing, emotion-driven eyebrow and eye movements, and natural head movements.

* Note that all results in this page use the first frame as reference image and conditioned on audio only without need of spatial conditions as templates.

Motion Diversity

Loopy can generate motion-adapted synthesis results for the same reference image based on different audio inputs, whether they are rapid, soothing, or realistic singing performances.

Singing

Additional results demonstrating singing

More Video Results

Additional results about non-human realistic images

Loopy also supports input images with side profiles effectively

More results about realistic portrait inputs

Comparison with Recent Methods

Ethics Concerns

The purpose of this work is only for research. The images and audios used in these demos are from public sources. If there are any concerns, please contact us (jianwen.alan@gmail.com) and we will delete it in time.

Acknowledgement

Some figures about film and interview in More Video Results are from Celebv-HQ. Some test audios and images are from Echomimic, EMO, and VASA-1 et al. Thanks to these great work!

BibTeX

If you find this project is useful to your research, please cite us:

@article{jiang2024loopy,

title={Loopy: Taming Audio-Driven Portrait Avatar with Long-Term Motion Dependency},

author={Jiang, Jianwen and Liang, Chao and Yang, Jiaqi and Lin, Gaojie and Zhong, Tianyun and Zheng, Yanbo},

journal={arXiv preprint arXiv:2409.02634},

year={2024}

}